Preserving Quality in Software Development

What is Software Quality?

This applies not only to software: every product has standards or measurements that determine whether it is considered good quality. According to Philip B. Crosby, an influential author and consultant and the initiator of the Zero Defects concept, “Quality is conformance to requirements” (1). Joseph Juran, widely known as the founder of many key quality management programs and of the Juran Institute, a consulting company that provides training and consulting services in quality management, defined quality as “Fitness for use” (2).

In software development, quality has been defined as:

- A customer determination, not an engineer’s, a marketing, or a general management determination. (3)

- Based on the customer’s actual experience with the product or service, measured against his or her requirements. (3)

- Meeting the needs of customers and thereby providing product satisfaction. (4)

- Freedom from deficiencies. (2)

Who is the customer here? The customer is the user who interacts with or uses the software product.

How to Measure Software Quality?

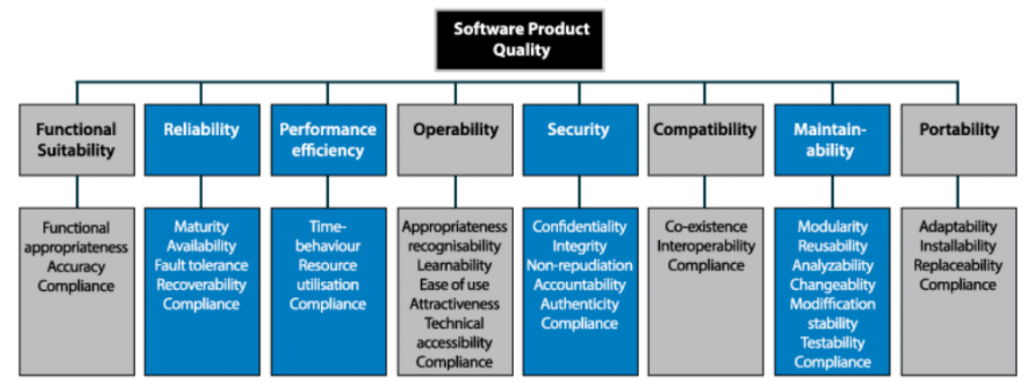

Numerous books, articles, and concepts discuss which attributes or characteristics need to be measured to define the quality of a software product. One of them is CISQ’s quality model, which we will briefly discuss in this article as an introduction to the desirable characteristics of a software product.

According to CISQ (Consortium for IT Software Quality), there are 8 characteristics of software product quality, as pictured in the diagram above (4). Besides Functional Suitability, 4 of the 7 non-functional characteristics (highlighted in blue in Picture 1) are the main characteristics that are commonly measured.

- Functional Suitability, a measure of feature functionality, to ensure that the completeness and correctness of the features meet the user’s requirements and objectives.

- Reliability, a measure of software stability over a certain period of use, to ensure that the software is failure-free and does not cause outages, unexpected behavior, data corruption, or other problems.

- Security, a measure of a software’s vulnerabilities, to ensure there is no unauthorized access, data leak, or other malicious act.

- Performance Efficiency, a measure of software performance and of how efficiently it consumes hardware resources, to ensure it responds well under operational load and does not cause excessive use of processor, memory, or other resources.

- Maintainability, a measure of how easily the software can be understood and maintained by other developers. Software with low maintainability results in excessive maintenance time and cost.

How Do We Preserve Software Quality?

If we look at the characteristics mentioned above, all of them are closely related to and defined by three main items: requirements maturity, good architecture, and good coding practices. Achieving quality is neither trivial nor cheap. It requires proper planning, discipline, and monitoring, and it should be considered from the very beginning of the software development process.

According to the report “The Economic Impacts of Inadequate Infrastructure for Software Testing”, prepared for the US National Institute of Standards and Technology (NIST), repairing a defect found at coding time costs 5 times as much as repairing one found during requirements analysis. The cost keeps rising the later a defect is found: 10 times when it is found in the testing stage, and 30 times when it is found in production. (5)
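To make these multipliers concrete, here is a minimal Python sketch. The multipliers are the ones quoted above, with requirements analysis as the 1x baseline; the base cost of 100 is a hypothetical figure chosen only for illustration.

```python
# Relative cost of fixing a defect, by the stage in which it is found.
# Multipliers are those quoted from the report; requirements analysis is the 1x baseline.
COST_MULTIPLIER = {
    "requirements": 1,
    "coding": 5,
    "testing": 10,
    "production": 30,
}


def repair_cost(base_cost: float, stage: str) -> float:
    """Estimate the cost of fixing one defect found at the given stage."""
    return base_cost * COST_MULTIPLIER[stage]


if __name__ == "__main__":
    base = 100.0  # hypothetical cost of a fix during requirements analysis
    for stage in COST_MULTIPLIER:
        print(f"{stage:>12}: {repair_cost(base, stage):8.1f}")
```

With this assumed baseline, the same defect that costs 100 to fix during requirements analysis costs 3000 once it reaches production.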

“Quality is free, but only to those who are willing to pay heavily for it.”

T. DeMarco and T. Lister

“Quality is never an accident; it is always the result of intelligent effort.”

John Ruskin

At Icehouse, we realize that good-quality software cannot be achieved only by having QA test the final product and find defects before going live. It also takes a good development process that absorbs a quality culture and is implemented across all teams. Quality becomes everybody’s responsibility, not just that of one particular team.

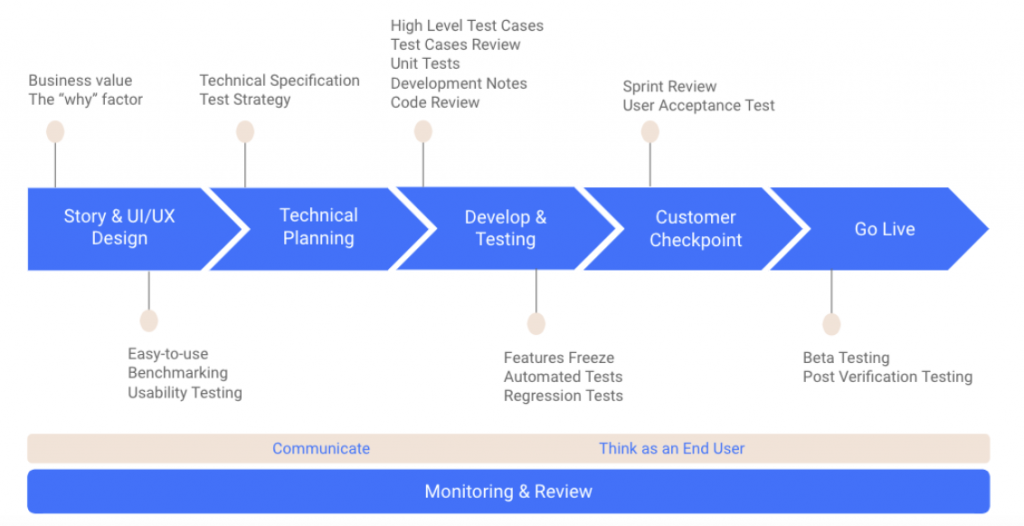

To show how we preserve quality at Icehouse, I will walk through a short version of our sprint (agile) development process, highlighting only the key items we do at each stage to preserve quality. In the last section, I will describe the types of testing and the tools we use to measure the quality of our deliverables.

Development Process

Story Design and UI/UX Design

This is the very first stage of our development process, where Business Analysts (BA) meet with the client to gather requirements and create stories for them. Once the stories are ready, a Sprint Grooming session is held in which the BA explains the stories to Developers and Quality Analysts (QA). The key items in Sprint Grooming are:

- All attendees should be ready, meaning that they have read the stories and are ready with a list of questions to be asked or discussed in the session.

- Questions or discussions should not just revolve around unclear story descriptions, but should also cover missing user scenarios, missing business value or the ‘why’ factors, etc.

UI/UX design is performed at roughly the same time as story design. Our UI/UX designer works closely with our BA. The key items here are:

- Focus on user journey and easy-to-use factors.

- Benchmarking.

- Validate the design with real users by performing usability testing.

Technical Planning

Technical planning is a very important activity since all deliverables from this stage will be used as a reference for the implementation stage for both development and testing. The key items here are:

- Technical specification, a document which describes the system architecture, frontend and backend components, and deployment and security architecture.

- Test strategy, a document which describes the scope of testing, test environment architecture, testing methods and tools, test case management, defect management, assumptions, and test dependencies.

Development and Testing

Development and testing activities are very tightly coupled and can be seen as one big process in our sprint. The key items here are:

- High level test cases, created by QA for each story before coding starts. The objective is to help Developers understand which scenarios will be tested against their code.

- Test case review, performed by other QAs and the BA to ensure good test case coverage.

- Unit tests, created by Developers to spot issues at a very early stage and to avoid the unnecessary effort of QA raising defects and Developers fixing them. The high level test cases can be used as a reference for unit tests.

- Code review, performed by senior Developers to ensure the code structure follows the pre-defined architecture and coding best practices.

- Development notes, created by Developers for each story to briefly explain what has been changed and how it impacts existing features. The objective is for QA to understand the impact of the changes and what needs to be tested as part of the regression test.

- Feature freeze, usually conducted 2 days prior to sprint completion, to ensure QA has enough time to test remaining defects and run regression in order to achieve a stable version of the sprint delivery.

- Automated tests, run on development or staging environments as often as required.

- Selected regression test, a regression test of scenarios not covered by automation, performed by QA each sprint on staging (a production-like environment). At this stage QA also needs to ensure the regression test covers all changes mentioned in the development notes provided by Developers.
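As an illustration of how high level test cases can serve as a reference for unit tests, here is a minimal Python sketch using the standard `unittest` module. The `transfer` function and the three scenarios are hypothetical, made up for this example; they are not taken from an actual Icehouse project.

```python
import unittest


def transfer(balance: int, amount: int) -> int:
    """Hypothetical feature under test: return the balance left after a transfer."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount > balance:
        raise ValueError("insufficient balance")
    return balance - amount


class TransferTest(unittest.TestCase):
    """Each test mirrors one (hypothetical) high level test case written by QA."""

    def test_valid_transfer_reduces_balance(self):
        self.assertEqual(transfer(1000, 250), 750)

    def test_transfer_above_balance_is_rejected(self):
        with self.assertRaises(ValueError):
            transfer(1000, 1500)

    def test_non_positive_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            transfer(1000, 0)
```

Saved to a file, the tests can be run with `python -m unittest <file>`. Keeping one unit test per high level scenario makes it easy for QA and Developers to see which scenarios are already covered before a story reaches testing.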

Customer Checkpoints

It is important to involve the customer during the sprint. One of the customer checkpoints is, upon sprint completion, to check that the sprint deliverables meet their expectations. After a sprint is completed, we perform the following activities:

- Sprint review, to demo the sprint deliverables, share the list of known defects with the customer, take their feedback, and discuss defect fix priorities with them. Feedback can then be groomed into an improvement or a story and scheduled for implementation in upcoming sprints.

- Support for user acceptance tests (UAT), working closely with the customer during their UAT to resolve any blocker immediately and ensure a smooth UAT.

Go Live Preparation, Deployment and Post Verification Test

Once deployment has been planned and scheduled, we perform the following:

- Regression test on end-to-end scenarios, performed by automation scripts and manually by QA on staging (a production-like environment).

- Beta testing of the production build, performed by QA prior to publishing mobile applications in the Google and Apple stores.

- Post verification testing on production environment, performed by QA after deployment into production is complete.

Monitoring & Review

As in other types of projects, monitoring and post-mortem review are always part of the process in a software development project, and they are essential for immediate improvements in the current sprint and long-term improvements in the future. The key items we monitor at Icehouse are as follows:

- Daily standup, to identify slow-progress tickets and blockers, and which team members are able to help resolve them. This is followed up with separate sessions among selected team members to work out solutions.

- Real-time velocity report, provided by Jira to help the PM understand the progress of the sprint, and to replan development resources and reprioritize stories whenever required.

- Real-time test results report, provided by TestRail to help the test lead and PM understand the progress of testing and, if required, replan testing resources and prioritize test scenarios.

- Defect meeting, to prioritize defect fixes with customers; this can be done in the Sprint Review or in separate sessions as required.

- Sprint retrospective, an open discussion among the project’s team members about what has been done well and what still needs to be improved.

- Root cause analysis, conducted whenever the number of defects found during QA testing (or UAT) is considered out of standard. The objective is to understand the root causes and find solutions to avoid them in the future.

Last but not least, no project will be successful without continuous communication between the project’s team members during the entire sprint. As a rule of thumb, we also remind each other to keep the idea of “Think as an end user” in each of our activities.

Software Testing Types & Tools

Following are the types of testing we have done for various types of applications we built in the past.

| Testing Type | Description | Test Examples | Tools | Related Quality Characteristics |
| --- | --- | --- | --- | --- |
| Unit Test | Implemented in order to identify defects at the earliest stage and reduce the number of defects that are delivered to the QA team, therefore improving the overall development and testing time and effort. Monitoring is done by measuring unit test coverage. | | SonarQube | Maintainability, Functional Suitability |
| Code Analysis | Continuous inspection of code quality and automatic reviews to detect code duplication and code smells. | | SonarQube | Maintainability |
| System Test | System test, including integration test with related third party systems, performed to ensure a smooth experience of the end-to-end user journey. | Integration test with Dana, Midtrans, Tokopedia and Bukalapak. | | Functional Suitability |
| Automation Test | Automation test to speed up regression test, which can be run by QA or Dev. | Full coverage of API automation tests with Postman of a banking transaction system on a POS terminal. | Postman, Newman | Functional Suitability |
| Load Test | Test conducted by loading up the system to the target concurrency (operational load). The aim is to meet performance targets for availability, concurrency or throughput, and response time. | High load test on a banking transaction system, up to 500 concurrent users with 30 minutes duration, resulting in up to 200+ API requests/second. High load test on a transport and logistics app, up to 2000 concurrent users with 1 minute duration, resulting in up to 100+ API requests/second. | JMeter | Performance Efficiency |
| Stress Test | Test conducted by providing a very high load to the system to determine the upper limits or sizing of the infrastructure. Usually used if future growth of application traffic is hard to predict. | Stress test on a banking transaction system, up to 1000 concurrent users with 3 hours duration, resulting in up to 300+ API requests/second. | JMeter | Performance Efficiency |
| Stability Test | Test run over an extended period of time at operational load. Intended to identify problems that may appear only after an extended period of time, such as a slowly developing memory leak or some unforeseen limitation which occurs after a number of transactions have been executed. | Stability test on transaction features, up to 50 concurrent users with 3 days duration, resulting in a 99th percentile response time below 700 ms. | JMeter | Reliability |
| Scalability Test | Load tests conducted to measure the system’s capability to scale up or scale down. The test can be performed by setting thresholds to trigger automatic scaling and running the load test to see if the scaling works and the system is in good shape (throughput and response time values are within an acceptable window) once the threshold is reached. | Scalability test on transaction features. The backend services were tested and scaled for up to 4 instances of a service. | JMeter | Reliability |
| Security Test | Test conducted to identify the threats in the system and measure its potential vulnerabilities, so that the system does not stop functioning and cannot be exploited. | Partnered with Madison Technologies & Former Chief Security Information Officer for Go-Jek to complete independent third party security testing for International Banking clients. | | Security |
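A response time target such as the one in the stability test example (99th percentile below 700 ms) can be checked from the raw response times. Below is a minimal Python sketch, assuming the nearest-rank definition of a percentile; reporting tools such as JMeter may compute percentiles slightly differently, and the function names and sample data are made up for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_target(samples_ms, pct=99, limit_ms=700):
    """Return True if the pct-th percentile response time is below limit_ms."""
    return percentile(samples_ms, pct) < limit_ms


if __name__ == "__main__":
    # Hypothetical response times in milliseconds: 98 fast requests and 2 slow ones.
    response_times_ms = [200] * 98 + [650, 1200]
    print(percentile(response_times_ms, 99))
    print(meets_target(response_times_ms))
```

Measuring a high percentile rather than the average keeps a small number of very slow requests from being hidden by many fast ones.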

Key Takeaways

- The main quality characteristics of a software product that are commonly measured are: Functional Suitability, Reliability, Security, Performance Efficiency, Maintainability.

- Achieving quality is neither trivial nor cheap. It requires proper planning, discipline, and monitoring across all units.

- Delaying quality checks and defect fixing leads to a significant increase in cost.

- Quality measurement is just a tool to measure quality; it is not the main ingredient that improves quality itself.

- Having a good process and a quality culture helps in maintaining quality.

References

- (1) P. B. Crosby, “Quality Is Free”, McGraw-Hill, 1979.

- (2) J. M. Juran, “Juran’s Quality Control Handbook”, McGraw-Hill, 1988.

- (3) A. V. Feigenbaum, “Total Quality Control”, McGraw-Hill, 1983.

- (4) CISQ, “CISQ Conformance Assessment Method: Quality Characteristic Measures”, CISQ–TR–2017–01, 2017.

- (5) “The Economic Impacts of Inadequate Infrastructure for Software Testing: Final Report”, Report 02-3, 2002.
