
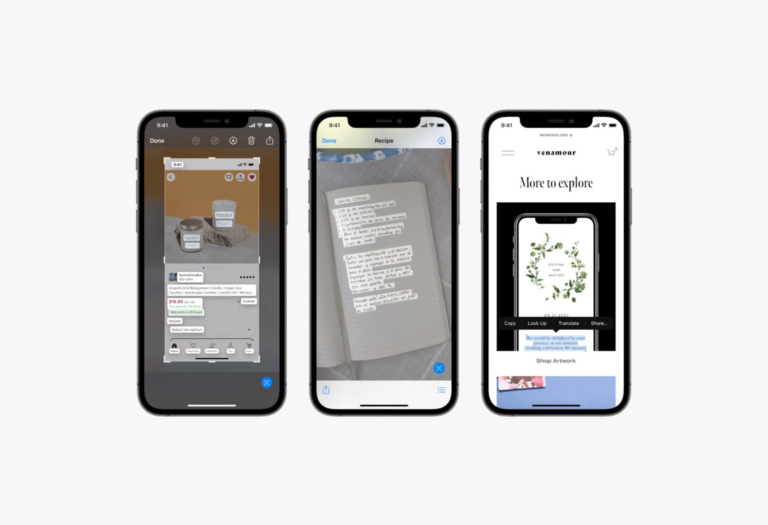

Bring LiveText to Your iOS Application

Read text from images using ImageAnalyzer and DataScannerViewController to help users save time

Overview of LiveText

At WWDC 2022, the VisionKit framework gained new APIs for recognizing text and QR codes in still images and in a live video stream. Both belong to the Live Text API, which lets developers recognize data in an image shown in the app, or in frames from the iPhone’s live camera, extract that data, and act on it.

This article covers the new components Apple added to the `VisionKit` framework: `ImageAnalyzer`, `ImageAnalysisInteraction`, and `DataScannerViewController`. These components help us, as developers, recognize text-based information inside an image, extract it, and act on it.

Implementing LiveText using `ImageAnalyzer` and `ImageAnalysisInteraction`

The `ImageAnalyzer` and `ImageAnalysisInteraction` components belong to the `VisionKit` framework, so we need to import that framework first.

The next thing we need to do is to declare both our `ImageAnalyzer` and `ImageAnalysisInteraction` instances. In this example, let’s declare those instances as class properties.
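A minimal sketch of the import and the two properties might look like this. The class name `ImageViewController` is a hypothetical placeholder, not a name from the original example.

```swift
import UIKit
import VisionKit  // ImageAnalyzer and ImageAnalysisInteraction live here (iOS 16+)

// `ImageViewController` is a hypothetical name used only for illustration.
final class ImageViewController: UIViewController {
    // Performs the analysis on an image.
    private let analyzer = ImageAnalyzer()
    // Provides the Live Text interface on a view and handles user interaction.
    private let interaction = ImageAnalysisInteraction()
}
```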

Since we are going to interact with the Live Text in an image, the interaction needs to be added to the image view that displays it, by calling `addInteraction(_:)` on that view.
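As a sketch, attaching the interaction could be done in `viewDidLoad()`. The `ImageViewController` class and its `imageView` property are assumptions made for this example; enabling user interaction on the image view is a precaution added here, since `UIImageView` ignores touches by default.

```swift
import UIKit
import VisionKit

// Hypothetical view controller, used only for illustration.
final class ImageViewController: UIViewController {
    private let interaction = ImageAnalysisInteraction()
    // Hypothetical image view; in a real app this might be an @IBOutlet.
    private let imageView = UIImageView()

    override func viewDidLoad() {
        super.viewDidLoad()
        imageView.frame = view.bounds
        view.addSubview(imageView)

        // UIImageView has user interaction disabled by default.
        imageView.isUserInteractionEnabled = true
        // Attach the Live Text interaction to the view that displays the image.
        imageView.addInteraction(interaction)
    }
}
```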

Then, we need to configure the `interaction` and `analyzer` whenever the image is set. First, we reset `preferredInteractionTypes`. Second, we clear the previous analysis. Last, we start analyzing the new image.
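The three steps can be sketched with a `didSet` observer on an `image` property. The class name and the `image` property are assumptions for this example, and `analyzeCurrentImage()` is left as a stub here.

```swift
import UIKit
import VisionKit

// Hypothetical view controller, used only for illustration.
final class ImageViewController: UIViewController {
    private let analyzer = ImageAnalyzer()
    private let interaction = ImageAnalysisInteraction()

    var image: UIImage? {
        didSet {
            // 1. Disable every interaction type while no analysis is available.
            interaction.preferredInteractionTypes = []
            // 2. Drop the analysis that belonged to the previous image.
            interaction.analysis = nil
            // 3. Start analyzing the new image.
            analyzeCurrentImage()
        }
    }

    func analyzeCurrentImage() {
        // Stub in this sketch; the article describes this helper next.
    }
}
```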

Explanation

- `.preferredInteractionTypes` configures which types of interaction can be performed on the image (only text, or others). In the code above, we reset it: setting it to an empty array disables the interaction.

- `.analysis` is the analysis result. We need to reset it before performing another image analysis.

- `analyzeCurrentImage()` starts analyzing the new image.

Tip: We can simplify the reset by providing an extension function as a helper for the `interaction` instance.
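One possible shape for such a helper is shown below. The method name `resetAnalysis()` is invented for this sketch and is not part of VisionKit.

```swift
import VisionKit

extension ImageAnalysisInteraction {
    /// Hypothetical helper (not a VisionKit API): disables the interaction
    /// and clears the analysis that belonged to the previous image.
    func resetAnalysis() {
        preferredInteractionTypes = []
        analysis = nil
    }
}
```

With this in place, the two reset lines collapse into a single `interaction.resetAnalysis()` call.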

Now, let’s talk about the analysis itself. The `analyzeCurrentImage()` function is a helper that contains the logic to analyze the image. This is where we run the analyzer, wait for it to finish, and get the analysis result.
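A sketch of this helper, following the pattern Apple showed at WWDC 2022, might look like this. The class name and the `image` property are assumptions, and the choice of `.text` plus `.machineReadableCode` is one possible configuration.

```swift
import UIKit
import VisionKit

// Hypothetical view controller, used only for illustration.
final class ImageViewController: UIViewController {
    private let analyzer = ImageAnalyzer()
    private let interaction = ImageAnalysisInteraction()
    var image: UIImage?

    func analyzeCurrentImage() {
        // Nothing to do when no image is set.
        guard let image else { return }

        Task {
            // Ask for text and machine-readable codes (such as QR codes).
            let configuration = ImageAnalyzer.Configuration([.text, .machineReadableCode])
            // The analysis is asynchronous; `try?` turns a failure into nil.
            let analysis = try? await analyzer.analyze(image, configuration: configuration)

            // Apply the result only if it exists and the image has not changed meanwhile.
            if let analysis, image == self.image {
                interaction.analysis = analysis
                interaction.preferredInteractionTypes = .automatic
            }
        }
    }
}
```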

Explanation:

- Check whether there is an image to be analyzed.

- Create a configuration instance for the analyzer.

- Analyze the image based on the configuration and get the analysis result.

- Check that the result is not empty and that the image is still the current one (since this is an async process).

- Assign the analysis result to the `interaction` instance, so that the user can interact with it.

- Set `.preferredInteractionTypes` to the system default interaction types.

Implementing Live Text API using DataScannerViewController

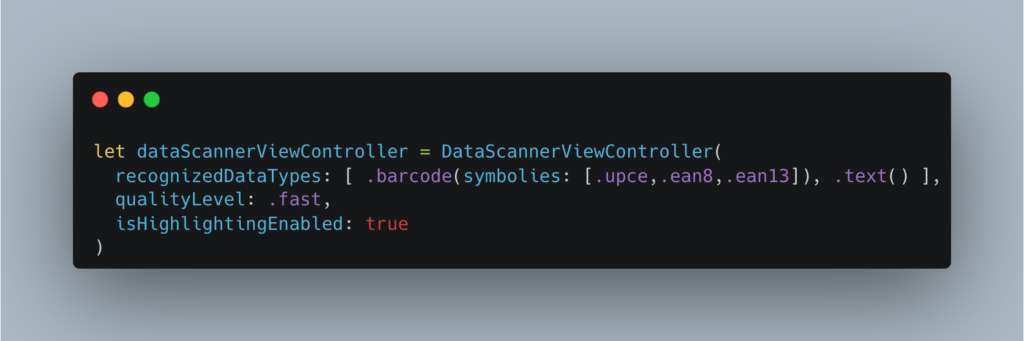

The `DataScannerViewController` and `DataScannerViewControllerDelegate` components belong to the `VisionKit` framework, so we need to import that framework first.

Next, we need to configure the recognizer by creating a `DataScannerViewController` instance.

The code above instantiates `DataScannerViewController` with a specific configuration:
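A sketch of such a configuration is shown below. The `makeDataScanner()` function name, the QR-only barcode criterion, and the `.accurate` quality level are assumptions chosen for illustration.

```swift
import UIKit
import Vision     // VNBarcodeSymbology, used for `.qr`
import VisionKit

// Hypothetical factory function, used only for illustration.
@MainActor
func makeDataScanner() -> DataScannerViewController {
    // Which kinds of data the scanner should look for.
    let recognizedDataTypes: Set<DataScannerViewController.RecognizedDataType> = [
        .barcode(symbologies: [.qr]),  // only QR codes (an assumed criterion)
        .text()                        // any kind of text
    ]

    return DataScannerViewController(
        recognizedDataTypes: recognizedDataTypes,
        qualityLevel: .accurate,       // optional, defaults to .balanced
        isHighlightingEnabled: true    // optional, defaults to false
    )
}
```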

- it recognizes only barcodes that match the given criteria

- it can recognize any kind of text

- it uses a high quality level and enables highlighting (both are optional)

Basically, we need to tell the controller which data types to recognize: only barcodes, only text, or both.

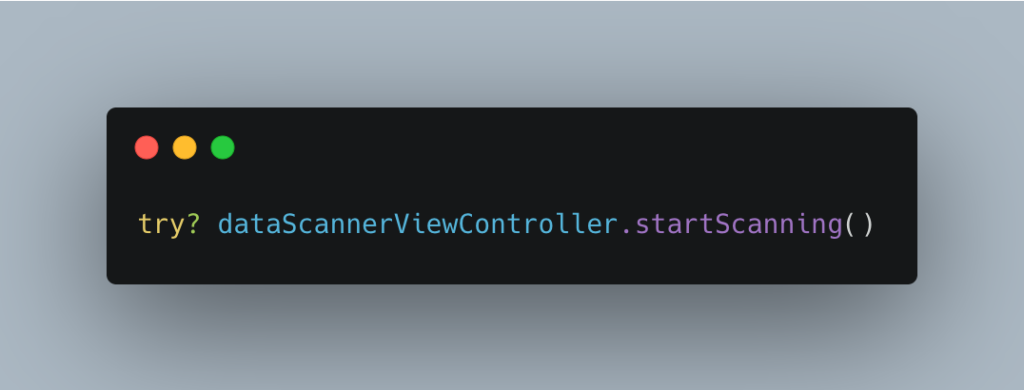

Then, let’s set the delegate so that this view controller can talk to other components.

After that, don’t forget to tell the controller to start scanning.
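Setting the delegate and starting the scan could be sketched as follows. The `presentScanner()` method name is an assumption, and the `isSupported` / `isAvailable` guard is an addition that is not described in the text above.

```swift
import UIKit
import VisionKit

final class MyViewController: UIViewController, DataScannerViewControllerDelegate {
    // Hypothetical entry point, for example called from a button action.
    func presentScanner() {
        // Scanning needs a supported device and permission to use the camera.
        guard DataScannerViewController.isSupported,
              DataScannerViewController.isAvailable else { return }

        let scanner = DataScannerViewController(recognizedDataTypes: [.text()])
        // Let the scanner report recognized items back to this view controller.
        scanner.delegate = self

        present(scanner, animated: true) {
            // startScanning() can throw, for example when the camera is unavailable.
            try? scanner.startScanning()
        }
    }
}
```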

Next, let’s implement the `DataScannerViewControllerDelegate` function `didTapOn item` in some component, for example your view controller, `MyViewController`.

This `didTapOn item` function is called when you tap a scanned item that VisionKit has recognized and is currently highlighting. Then, it’s up to us what to do with the text received from the delegate: for example, passing the text to the view, or other cool stuff.
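A sketch of the delegate implementation might look like this. The `handle(_:)` method is a hypothetical hook that stands in for whatever the app does with the recognized string.

```swift
import UIKit
import VisionKit

final class MyViewController: UIViewController {}

extension MyViewController: DataScannerViewControllerDelegate {
    func dataScanner(_ dataScanner: DataScannerViewController, didTapOn item: RecognizedItem) {
        switch item {
        case .text(let text):
            // The recognized string.
            handle(text.transcript)
        case .barcode(let barcode):
            // The payload is nil when it has no string representation.
            handle(barcode.payloadStringValue ?? "")
        default:
            // Item kinds this sketch does not know about.
            break
        }
    }

    // Hypothetical hook: forward the string to the view, a model, and so on.
    private func handle(_ string: String) {
        print("Tapped item:", string)
    }
}
```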

Give LiveText a try!

Now you can run the app, and you will see a Live Text button in the bottom-right corner of the image view. Tap the button to recognize the data in the image. For a code example, see the GitHub repository listed in the references.

Conclusion

Recognizing text in an image using the new VisionKit APIs is really interesting. With this new API, we give VisionKit an image to analyze, and VisionKit renders the analysis result onto the `UIImageView` instance, so that the user can interact with the Live Text.

References

Enabling Live Text interactions with images

Add Live Text interaction to your app

Scanning data with a Camera

Capture Machine Readable Codes and text with VisionKit

GitHub repository
